Stubsack: weekly thread for sneers not worth an entire post, week ending 22nd February 2026

-

who up continvoucly morging they branches

That slopped-out “diagram” plagiarised Vincent Driessen’s “A successful Git branching model”, BTW.

-

AI bros do new experiments in making themselves even stupider. Going from ‘explain what you did but dumb it down for me and my degraded attention span’ into ‘just make a simplified cartoon out of it’.

Proud of not understanding what is going on. None of these people could hack the Gibson.

E: If they all hate programming so much, perhaps a change of job is in question, sure might not pay as much, but it might make them happier.

Surely at least a few of them have worked up enough seed capital to try their hand at used-car dealerships. I can attest that the juicier markets just outside the Bay Area are fairly saturated, but maybe they could push into lesser-served locales like Lost Hills or Weaverville.

-

A little exchange on the EA forums I thought was notable: https://forum.effectivealtruism.org/posts/EDBQPT65XJsgszwmL/long-term-risks-from-ideological-fanaticism?commentId=b5pZi5JjoMixQtRgh

tldr; a super long essay lumping together Nazism, Communism and religious fundamentalism (I didn’t read it, just the comments). The comment I linked notes how liberal democracies have also killed a huge number of people (in the commenter’s home country, in the name of purging communism):

The United States presented liberal democracy as a universal emancipatory framework while materially supporting anti-communist purges in my country during what is often called the “Jakarta Method”. Between 500,000 and 1 million people were killed in 1965–66, with encouragement and intelligence support from Western powers. Variations of this model were later replicated in parts of Latin America.

The OP’s response is to try to explain how that wasn’t real “liberal democracy” and to try to reframe the discussion. Another commenter is even more direct: they complain that half the sources listed are Marxist.

A bit bold to unqualifiedly recommend a list of thinkers of which ~half were Marxists, on the topic of ideological fanaticism causing great harms.

I think it’s a bit bold of this commenter to ignore the empirical facts cited in how many people ‘liberal democracies’ had killed and to exclude sources simply for challenging your ideology.

Just another reminder of how the EA movement is full of right wing thinking and how most of it hasn’t considered even the most basic of leftist thought.

I continue to maintain that EA boils down to high-dollar consumerism focused on intangible goods. I’m sure that statement won’t fly on LW or any other EA forum, but my thoughts on psychiatry don’t fly at a Scientologist convention either.

-

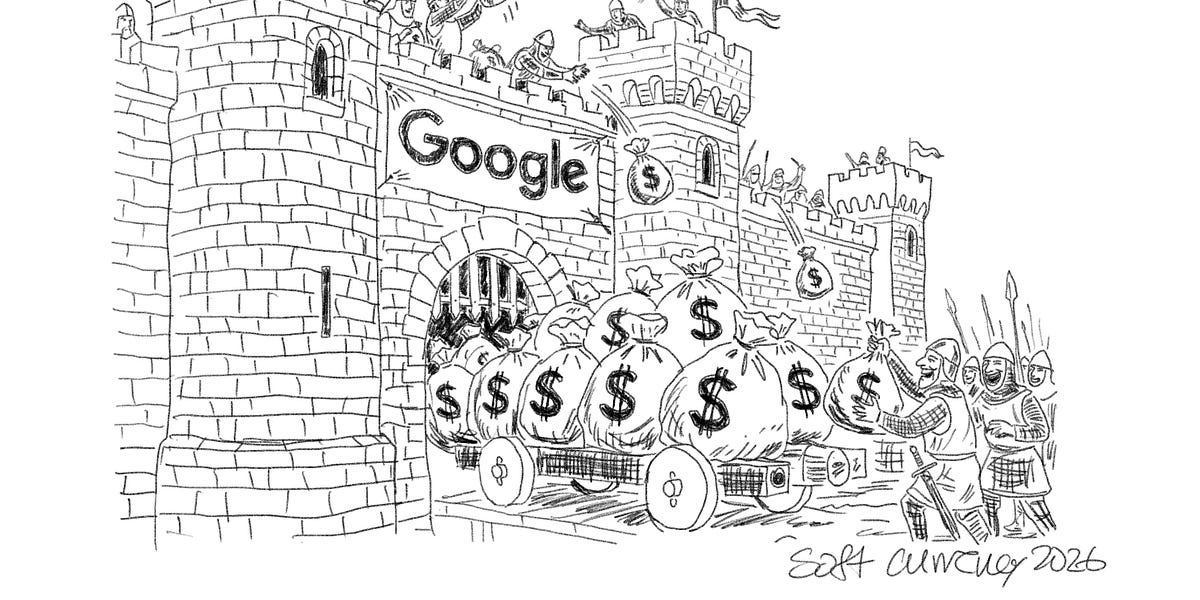

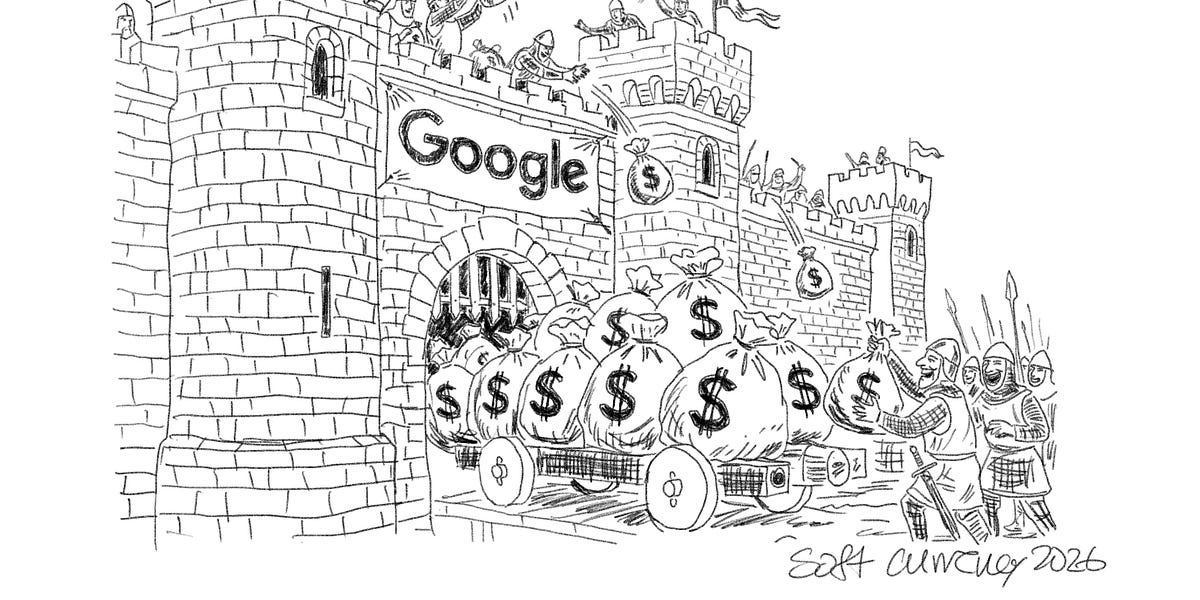

The Dangerous Economics of Walk-Away Wealth in the AI Talent War

Watch now | How firms are accidentally paying their best employees to become their biggest competitors.

(softcurrency.substack.com)

- Anthropic (Medium Risk) Until mid-February of 2026, Anthropic appeared to be happy, talent-retaining. When an AI Safety Leader publicly resigns with a dramatic letter stating “the world is in peril,” the facade of stability cracks. Anthropic is a delayed fuse, just earlier on the vesting curve than OpenAI. The equity is massive ($300B+ valuation) but largely illiquid. As soon as a liquidity event occurs, the safety researchers will have the capital to fund their own, even safer labs.

WTF is “even safer” ??? how bout we like just don’t create the torment nexus.

Wonder if the 50% attrition prediction comes to pass though…

the capital to fund their own, even safer labs.

I wonder, is this a theory of “safety” analogous to what’s driven the increased gigantism of vehicles in the US? Sure seems like it.

-

That slopped-out “diagram” plagiarised Vincent Driessen’s “A successful Git branching model”, BTW.

It’s funny that such a thing is rare enough in the corpus to come out so recognizably in the output.

-

This is why CCC being able to compile real C code at all is noteworthy. But it also explains why the output quality is far from what GCC produces. Building a compiler that parses C correctly is one thing. Building one that produces fast and efficient machine code is a completely different challenge.

Every single one of these failures is waved away because supposedly it’s impressive that the AI can do this at all. Do they not realize the obvious problem with this argument? The AI has been trained on all the source code that Anthropic could get their grubby hands on! This includes GCC and clang and everything remotely resembling a C compiler! If I took every C compiler in existence, shoved them in a blender, and spent $20k on electricity blending them until the resulting slurry passed my test cases, should I be surprised or impressed that I got a shitty C compiler? If an actual person wrote this code, they would be justifiably mocked (or they’re a student trying to learn by doing, and LLMs do not learn by doing). But AI gets a free pass because it’s impressive that the slop can come in larger quantities now, I guess. These Models Will Improve. These Issues Will Get Fixed.

Building a compiler that parses C correctly is one thing. Building one that produces fast and efficient machine code is a completely different challenge.

Ye, the former can be done in a month of non-full-time work by an undergrad who took Compilers 101 this semester or in literally a single day by a professional, and the latter is an actual useful product.

So of course AI will excel at doing the first one worse (vibecc doesn’t even reject invalid C) and at an insane resource cost.

-

Want to wade into the snowy surf of the abyss? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid.

Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post Xitter web has spawned so many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be)

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Credit and/or blame to David Gerard for starting this. Also, hope you had a wonderful Valentine’s Day!)

-

who up continvoucly morging they branches

-

what I’m thinking about is for how many years now they have been promising that just one more datacenter will fix the “hallucinations”, yet this mess is indistinguishable from nonsense output from three years ago. I see “AI” is going well

you can count on microslop to always be behind the curve

-

A longread on AI greenwashing begins thusly:

The expansion of data centres - which is driven in large part by AI growth - is creating a shocking new demand for fossil fuels. The tech companies driving AI expansion try to downplay AI’s proven climate impacts by claiming that AI will eventually help solve climate change. Our analysis of these claims suggests that rather than relying on credible and substantiated data, these companies are writing themselves a blank cheque to pollute on the empty promise of future salvation. While the current negative effects of AI on the climate are clear, proven and growing, the promise of large-scale solutions is often based on wishful thinking, and almost always presented with scant evidence.

(Via.)

-

The phrase “ambient AI listening in our hospital” makes me hear the “Dies Irae” in my head.

-

can all of rationalism be reduced to logorrhea with load-bearing extreme handwaving (in this case, agentic self preservation arises through RL scaling)?

-

OT: I tried switching away from my instance to quokk.au because it becomes harder and harder to justify being on a pro-ai instance, but it seems that your posts stopped federating recently.

Which makes that a bit harder to do. Any clue why?

bluemonday1984@awful.systems update: quokka reached out to me and apparently you had been banned on another instance for report abuse, and that ban had synchronised to quokk.au. You should be unbanned now, which means the next stubsack should federate again.

E: I do not know how tags work E2: why does that format to a mailto link?

-

new episode of odium symposium. we look at rousseau’s program for using universal education to turn women into drones

-

The phrase “ambient AI listening in our hospital” makes me hear the “Dies Irae” in my head.

I’m personally hearing “Morceaux” myself.

-

So they’ve highlighted an interesting pattern to compensation packages, but I find their entire framing of it gross and disgusting, in a capitalist techbro kinda way.

Like the way they describe Part III’s case study:

The uncapped payouts were so large that it fractured the relationship between Capital (Activision) and Labor (Infinity Ward).

Activision was trying to cheat its labor after they made it massively successful profits! Describing it as a fractured relationship denies the agency on Activision’s part to choose to be greedy capitalist pigs.

The talent that left formed the core of the team that built Titanfall and Apex Legends, franchises that have since generated billions in revenue, competing directly in the same first-person shooter market as Call of Duty.

Activision could have paid them what they owed them, and kept paying them incentive based payouts, and come out billions of dollars ahead instead of engaging in short-sighted greedy behavior.

I would actually find this article interesting and tolerable if they framed it as “here are the perverse incentives capitalism encourages businesses to create” instead of “here is how to leverage the perverse incentives in your favor by paying your employees just enough, but not enough to actually reward them a fair share” (not that they were honest enough to use those words).

WTF is “even safer” ??? how bout we like just don’t create the torment nexus.

I think the writer isn’t even really evaluating that aspect, just thinking in terms of workers becoming capital owners and how companies should try to prevent that to maximize their profits. The idea that Anthropic employees might care on any level about AI safety (even hypocritically and ineffectually) doesn’t enter into the reasoning.

-

can all of rationalism be reduced to logorrhea with load-bearing extreme handwaving (in this case, agentic self preservation arises through RL scaling)?

no there’s also racist twitter

-

need a word for the sort of tech ‘innovation’ that consists of inventing and monetizing new types of externalities which regulators aren’t willing to address. like how bird scooters aren’t a scam, but they profit off of littering sidewalk space so that ppl with disabilities can’t get around

EDIT: a similar, perhaps the same concept is innovation which functions by capturing or monopolizing resources that aren’t as yet understood to be resources. in the bird example, we don’t think of sidewalk space as a capturable resource, and yet

-

need a word for the sort of tech ‘innovation’ that consists of inventing and monetizing new types of externalities which regulators aren’t willing to address. like how bird scooters aren’t a scam, but they profit off of littering sidewalk space so that ppl with disabilities can’t get around

EDIT: a similar, perhaps the same concept is innovation which functions by capturing or monopolizing resources that aren’t as yet understood to be resources. in the bird example, we don’t think of sidewalk space as a capturable resource, and yet

parasitech?

-

parasitech?

I guess that doesn’t emphasise the “innovation” aspect much