Stubsack: weekly thread for sneers not worth an entire post, week ending 1st February 2026

-

Chris Lintott (@chrislintott.bsky.social):

We’re getting so many journal submissions from people who think ‘it kinda works’ is the standard to aim for.

Research Notes of the AAS in particular, which was set up to handle short, moderated contributions especially from students, is getting swamped. Often the authors clearly haven’t read what they’re submitting (descriptions of figures that don’t exist or don’t show what they purport to).

I’m also getting wild swings in topic. A rejection of one paper will instantly generate a submission of another, usually on something quite different.

Many of these submissions are dense with equations and pseudo-technological language which makes it hard to give rapid, useful feedback. And when I do give feedback, often I get back whatever their LLM says.

Including the very LLM responses like ‘Oh yes, I see that <thing that was fundamental to the argument> is wrong, I’ve removed it. Here’s something else’

Research Notes is free to publish in and I think provides a very valuable service to the community. But I think we’re a month or two from being completely swamped.

One of the great tragedies of AI and science is that the proliferation of garbage papers and journals is creating pressure to return to more closed systems based on interpersonal connections and established prestige hierarchies that had only recently been opened up somewhat to greater diversity.

-

The Wikipedia article is cursed

Honestly, even the original paper is a bit silly. Are all game theory mathematics papers this needlessly farfetched?

-

Want to wade into the snowy surf of the abyss? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid.

Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post-Xitter web has spawned so many “esoteric” right-wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality-challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Credit and/or blame to David Gerard for starting this. What a year, huh?)

-

Kyle Hill has gone full doomer after reading too much Big Yud and the Yud & Soares book. His latest video is titled “Artificial Superintelligence Must Be Illegal.” Previously, on Awful, he was cozying up to effective altruists and longtermists. He used to have a robotic companion character who would banter with him, but it seems like he’s no longer in that sort of jocular mood; he doesn’t trust his waifu anymore.

-

Kyle Hill has gone full doomer after reading too much Big Yud and the Yud & Soares book. His latest video is titled “Artificial Superintelligence Must Be Illegal.” Previously, on Awful, he was cozying up to effective altruists and longtermists. He used to have a robotic companion character who would banter with him, but it seems like he’s no longer in that sort of jocular mood; he doesn’t trust his waifu anymore.

Wasn’t he on YouTube trying to convince people that Nuclear Energy is Fine Actually? Figures.

-

Chris Lintott (@chrislintott.bsky.social):

We’re getting so many journal submissions from people who think ‘it kinda works’ is the standard to aim for.

Research Notes of the AAS in particular, which was set up to handle short, moderated contributions especially from students, is getting swamped. Often the authors clearly haven’t read what they’re submitting (descriptions of figures that don’t exist or don’t show what they purport to).

I’m also getting wild swings in topic. A rejection of one paper will instantly generate a submission of another, usually on something quite different.

Many of these submissions are dense with equations and pseudo-technological language which makes it hard to give rapid, useful feedback. And when I do give feedback, often I get back whatever their LLM says.

Including the very LLM responses like ‘Oh yes, I see that <thing that was fundamental to the argument> is wrong, I’ve removed it. Here’s something else’

Research Notes is free to publish in and I think provides a very valuable service to the community. But I think we’re a month or two from being completely swamped.

people who think ‘it kinda works’ is the standard to aim for

I swear that this is a form of AI psychosis or something because the attitude is suddenly ubiquitous among the AI obsessed.

-

Kyle Hill has gone full doomer after reading too much Big Yud and the Yud & Soares book. His latest video is titled “Artificial Superintelligence Must Be Illegal.” Previously, on Awful, he was cozying up to effective altruists and longtermists. He used to have a robotic companion character who would banter with him, but it seems like he’s no longer in that sort of jocular mood; he doesn’t trust his waifu anymore.

kinda depressing seeing people fall for Yud’s shtick without realising about all the other bullshit (though in fairness the average person is not aware of the many years of rationalism lore). thankfully people in the comment section are more skeptical but still cautious, which I think is a fair reaction to all this

-

The AI craze might end up killing graphics card makers:

Zotac SK’s message: “(this) current situation threatens the very existence of (add-in-board partners) AIBs and distributors.”

The current situation is so serious that it is worrisome for the future existence of graphics card manufacturers and distributors. They announced that memory supply will not be sufficient and that GPU supply will also be reduced.

Curiously, Zotac Korea has included lowly GeForce RTX 5060 SKUs in its short list of upcoming “staggering” price increases.

I wonder if the AI companies realize how many people will be really pissed off at them when so many tech-related things become expensive or even unavailable, and everyone will know that it’s only because of useless AI data centers?

well with the recent Microsoft CEO statement on “we have to find a use for this stuff or it won’t be socially acceptable to waste so much electricity on it” they have some level of awareness, but only a very surface level awareness

-

OT: Insurance wrote my truck off. 16k in damage, like holy shit balls.

-

that post got way funnier with Eliezer’s recent twitter post about “EAs developing more complex opinions on AI other than it’ll kill everyone is a net negative and cancelled out all the good they ever did”

Quick, someone nail your 95-page blog post to the front door of lighthaven or whatever they call it.

-

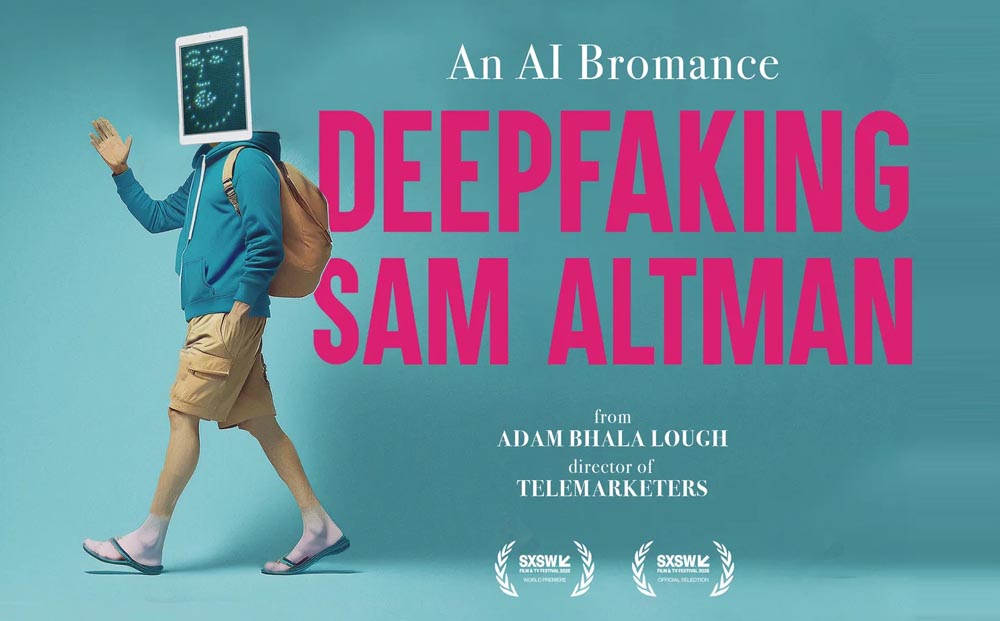

I missed the west side screening, might try to catch noho. Can’t tell from the trailer if it will be good or not.

-

“AI blunder in Aurskog-Høland [Norway] – children received water bills”

The sources linked are all in norwegian, so you’ll have to translate them yourself if you’re interested, but Patricia’s summary seems reasonable. The government authority in question had to hire extra people to undo the mess that the ai system caused. There’s a commercial vendor involved somewhere, but if they were named I didn’t spot it.

Patricia Aas 🐢🏳️‍🌈 (@patigallardo.bsky.social)

«AI blunder in Aurskog-Høland [Norway] – children received water bills»

-

Amazon’s latest round of 16k layoffs for AWS was called “Project Dawn” internally, and the public line is that the layoffs are because of increased AI use. AI has become useful, but as a way to conceal business failure. They’re not cutting jobs because their financials are in the shitter, oh no, it’s because they’re just too amazing at being efficient. So efficient they sent the corporate fake condolences email before informing the people they’re firing, referencing a blog post they hadn’t yet published.

It’s Schrödinger’s Success. You can neither prove nor disprove the effects of AI on the decision, or whether the layoffs are an indication of good management or fundamental mismanagement. And the media buys into it with headlines like “Amazon axes 16,000 jobs as it pushes AI and efficiency” that are distinctly ambivalent on how 16k people could possibly have been redundant in a tech company that’s supposed to be a beacon of automation.

-

The sad thing is I have some idea of what it’s trying to say. One of the many weird habits of the Rationalists is that they fixate on a few obscure mathematical theorems and then come up with their own ideas of what these theorems really mean. Their interpretations may be only loosely inspired by the actual statements of the theorems, but it does feel real good when your ideas feel as solid as math.

One of these theorems is Aumann’s agreement theorem. I don’t know what the actual theorem says, but the LW interpretation is that any two “rational” people must eventually agree on every issue after enough discussion, whatever rational means. So if you disagree with any LW principles, you just haven’t read enough 20k word blog posts. Unfortunately, most people with “bounded levels of compute” ain’t got the time, so they can’t necessarily converge on the meta level of, never mind, screw this, I’m not explaining this shit. I don’t want to figure this out anymore.

I know what it says and it’s commonly misused. Aumann’s Agreement says that if two people disagree on a conclusion then either they disagree on the reasoning or the premises. It’s trivial in formal logic, but hard to prove in Bayesian game theory, so of course the Bayesians treat it as some grand insight rather than a basic fact. That said, I don’t know what that LW post is talking about and I don’t want to think about it, which means that I might disagree with people about the conclusion of that post~

-

Chris Lintott (@chrislintott.bsky.social):

We’re getting so many journal submissions from people who think ‘it kinda works’ is the standard to aim for.

Research Notes of the AAS in particular, which was set up to handle short, moderated contributions especially from students, is getting swamped. Often the authors clearly haven’t read what they’re submitting (descriptions of figures that don’t exist or don’t show what they purport to).

I’m also getting wild swings in topic. A rejection of one paper will instantly generate a submission of another, usually on something quite different.

Many of these submissions are dense with equations and pseudo-technological language which makes it hard to give rapid, useful feedback. And when I do give feedback, often I get back whatever their LLM says.

Including the very LLM responses like ‘Oh yes, I see that <thing that was fundamental to the argument> is wrong, I’ve removed it. Here’s something else’

Research Notes is free to publish in and I think provides a very valuable service to the community. But I think we’re a month or two from being completely swamped.

that kinda tracks the type of replies @corbin@awful.systems is seeing to his lobsters challenge, doesn’t it?

-

Excellent BSky sneer about the preposterous “free AI training” the Brits came up with. 10/10, quality sneer.

-

The Wikipedia article is cursed

I’d say even the part where the article tries to formally state the theorem is not written well. Even then, it’s very clear how narrow the formal statement is. You can say that two agents agree on any statement that is common knowledge, but you have to be careful about exactly how you’re defining “agent”, “statement”, and “common knowledge”. If I actually wanted to prove a point with Aumann’s agreement theorem, I’d have to make sure my scenario fits in the mathematical framework. What is my state space? What are the events partitioning the state space that form an agent? Etc.
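To make that legwork concrete, here is a minimal Python sketch of the usual finite setup, as I understand it: a state space, a common prior, and one information partition per agent. Everything in it is a toy assumption for illustration (the four states, the uniform prior, the agents "Alice" and "Bob", the event, and all the function names); it is not taken from the Wikipedia article or the original paper. "Common knowledge" is modelled as "true everywhere on the cell of the meet of the partitions that contains the actual state".

```python
from fractions import Fraction

def cell_of(partition, state):
    """Return the cell of `partition` that contains `state`."""
    return next(cell for cell in partition if state in cell)

def posterior(prior, partition, event, state):
    """P(event | the agent's information cell at `state`)."""
    cell = cell_of(partition, state)
    return sum(prior[s] for s in cell & event) / sum(prior[s] for s in cell)

def meet_cell(partitions, state):
    """Cell of the meet (finest common coarsening) containing `state`."""
    reached, frontier = {state}, [state]
    while frontier:
        s = frontier.pop()
        for partition in partitions:
            for t in cell_of(partition, s) - reached:
                reached.add(t)
                frontier.append(t)
    return frozenset(reached)

def common_knowledge_posteriors(prior, partitions, event, state):
    """Posteriors (one per agent) if they are common knowledge at `state`.

    Returns None when some agent's posterior varies across the meet cell,
    i.e. when the posteriors are NOT common knowledge.
    """
    cell = meet_cell(partitions, state)
    result = []
    for partition in partitions:
        values = {posterior(prior, partition, event, s) for s in cell}
        if len(values) != 1:
            return None
        result.append(values.pop())
    return result

# Hypothetical toy scenario: four equally likely states, event {1, 4}.
OMEGA = (1, 2, 3, 4)
PRIOR = {s: Fraction(1, 4) for s in OMEGA}
EVENT = frozenset({1, 4})
ALICE = [frozenset({1, 2}), frozenset({3, 4})]
BOB_A = [frozenset({1, 2, 3}), frozenset({4})]   # posteriors not common knowledge
BOB_B = [frozenset({1, 3}), frozenset({2, 4})]   # posteriors common knowledge

print(posterior(PRIOR, ALICE, EVENT, 1), posterior(PRIOR, BOB_A, EVENT, 1))
print(common_knowledge_posteriors(PRIOR, [ALICE, BOB_A], EVENT, 1))
print(common_knowledge_posteriors(PRIOR, [ALICE, BOB_B], EVENT, 1))
```

In the first scenario Alice and Bob hold different posteriors at state 1 (1/2 and 1/3), and the theorem has nothing to say about it, because Bob's posterior would be different at state 4 and so is not common knowledge. In the second scenario both posteriors are constant across the whole meet cell, which is the only case the theorem covers, and there they coincide. That is how narrow the formal statement is before anyone even gets to real-world "rational people".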

The rats never seem to do the legwork that’s necessary to apply a mathematical theorem. I doubt most of them even understand the formal statement of Aumann’s theorem. Yud is all about “shut up and multiply,” but has anyone ever seen him apply Bayes’s theorem and multiply two actual probabilities? All they seem to do is pull numbers out of their ass and fit superexponential curves to 6 data points because the superintelligent AI is definitely coming in 2027.

-

I know what it says and it’s commonly misused. Aumann’s Agreement says that if two people disagree on a conclusion then either they disagree on the reasoning or the premises. It’s trivial in formal logic, but hard to prove in Bayesian game theory, so of course the Bayesians treat it as some grand insight rather than a basic fact. That said, I don’t know what that LW post is talking about and I don’t want to think about it, which means that I might disagree with people about the conclusion of that post~

I think Aumann’s theorem is even narrower than that, after reading the Wikipedia article. The theorem doesn’t even reference “reasoning”, unless you count observing that a certain event happened as reasoning.

-

The Wikipedia article is cursed

“you should watch [Steven Pinker’s] podcast with Richard Hanania” cool suggestion scott

-

“you should watch [Steven Pinker’s] podcast with Richard Hanania” cool suggestion scott

Surely this is a suitable reference for a math article!