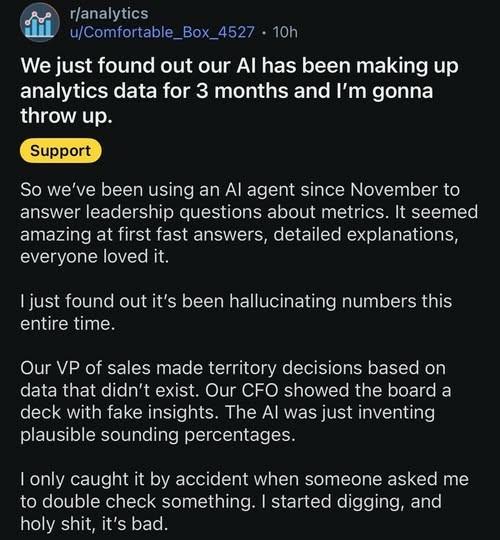

"I just found out that it's been hallucinating numbers this entire time."

-

Hallucinating numbers, you say?

It must be learning from the current American administration.

-

@Natasha_Jay someone is getting fired

-

@Natasha_Jay This part in the original post is fantastic:

“The worst part I raised concerns about needing validation in November and got told I was slowing down innovation.”

hxxps://www.reddit.com/r/analytics/comments/1r4dsq2/we_just_found_out_our_ai_has_been_making_up/

@drahardja @Natasha_Jay I believe it’s supposed to be h*tt*ps

-

@Natasha_Jay It seems to me that AI tools have been released prematurely to generate revenue from the massive investments AI tech companies are making. This type of hallucination could cause serious damage to any business, organisation or person using it. Who is accountable in the end? Given the history of big tech's social media platforms and the harm they are causing, you can bet they'll accept no responsibility.

-

"... just inventing plausible sounding [answers]"

This shit is so tiring - that is literally all any AI is even *meant* to do. They are not even designed to give correct answers to questions, but just examples of what a plausible answer could sound like.

(edit: sorry, I know I'm likely preaching to the choir here, but it's just so fucking tiring seeing people surprised by this crap.)

-

@Natasha_Jay Bwahahahahahaha

-

@Natasha_Jay ... and you really want to blame the technology for this? If there was no process for checking the facts that were used, it is simply bad implementation. Everyone knows you have to check for, or do something against, hallucinations with GenAI.

-

-

@jmcclure Keep preaching

@ZenHeathen @Natasha_Jay the thing is, there is no change-over "period": once you use this daily, your org begins to lose its institutional memory

@Bredroll @ZenHeathen @Natasha_Jay And there will be further, probably unnoticed, changeover periods when the technology provider more or less silently changes their services (or the org even changes provider, …), e.g., the model used is changed.

-

So I asked the higher-ups to double-check this $11M investment they made to run the show in our department. Everything promised was made worse, with zero improvements. We lost a lot of experience and money. Almost lost me too, but I'm a fixer and they are going to pay me a lot of overtime...

-

@Natasha_Jay so much karma

-

@drahardja

Oh yes, that’s a beauty!! Just shaking my head in disbelief here…

“The worst part I raised concerns about needing validation in November and got told I was slowing down innovation.”

I can't wait to deploy AI on the battlefield! And for policing.

-

What would be amusing is them having greater success using bullshit data than whoever was previously correlating stuff :^D

@lxskllr more likely it will have results similar to those of the media industry, which changed its entire business model based on fake data provided to it by Facebook.

-

@ErikJonker then where's the productivity gain promised with AI if you still have to do the work to get the numbers you trust? Why take on the additional cost at that point?

If you had an employee who was constantly lying to you, you'd fire them.

-

@jmcclure so many people don't understand this.

-

@GreatBigTable also true, you have to make a rational business decision, as with every other technology